TimeGPT Foundation Forecasting

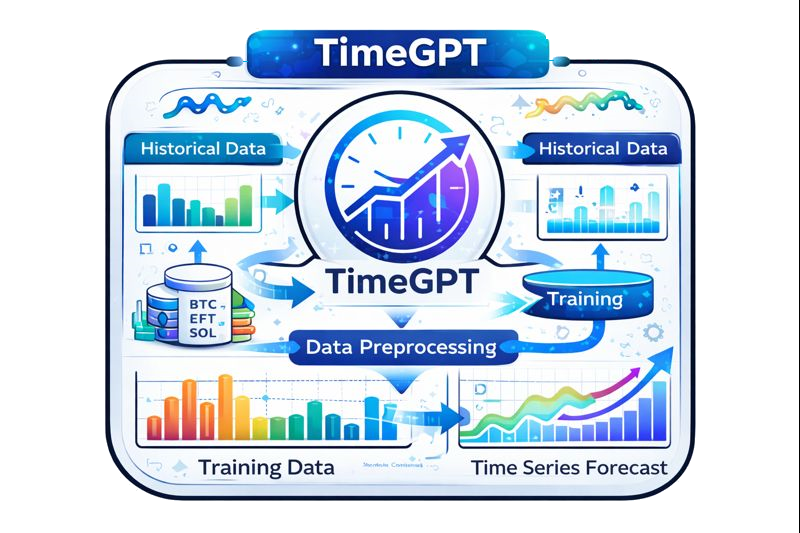

TimeGPT is a transformer-based foundation model trained on large, multi-domain time series corpora. It learns general patterns such as seasonality, shocks, and regime drift, then adapts quickly to a specific crypto symbol from a short context window.

How TimeGPT Works

TimeGPT transforms past observations into embeddings, applies multi-head attention to capture long-range dependencies, and predicts a distribution of future values. Because the model is pretrained on many markets, it can recognize patterns such as volatility clustering and sudden liquidity shifts even before it is fine-tuned on a target symbol.

For crypto, we feed TimeGPT price, volume, funding rates, and calendar signals, then forecast multiple horizons with uncertainty bands. The width of those bands becomes a confidence weight in the trading decision engine.

- Global pretraining for cross-market priors.

- Positional encodings for irregular time spacing.

- Quantile outputs for asymmetric risk modeling.

- Fast autoregressive decoding for real-time updates.
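To make the point about irregular time spacing concrete, here is a minimal sketch of a sinusoidal encoding evaluated at real elapsed time instead of at integer positions. It is an illustration under stated assumptions, not TimeGPT's confirmed internals: the function name `time_positional_encoding` and the parameters `d_model` and `max_period` are hypothetical.

```python
import numpy as np

def time_positional_encoding(timestamps, d_model=8, max_period=10_000.0):
    """Sinusoidal encoding evaluated at elapsed time (hypothetical helper).

    Using real time gaps instead of integer positions means two observations
    one minute apart get closer encodings than two observations an hour apart.
    d_model is assumed to be even.
    """
    t = np.asarray(timestamps, dtype=float)
    t = t - t[0]  # elapsed time since the first observation
    # Geometric ladder of frequencies, as in the standard sinusoidal encoding.
    freqs = 1.0 / max_period ** (np.arange(0, d_model, 2) / d_model)
    angles = t[:, None] * freqs[None, :]
    enc = np.empty((t.size, d_model))
    enc[:, 0::2] = np.sin(angles)  # even columns: sine
    enc[:, 1::2] = np.cos(angles)  # odd columns: cosine
    return enc

# Observations at uneven gaps (in minutes): 1 minute, then 58 minutes, then 1 minute.
pe = time_positional_encoding([0.0, 1.0, 59.0, 60.0], d_model=8)
```

Each row of `pe` would be added to (or concatenated with) the value embedding of the matching observation.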

Embed Context

Normalize price history, inject time features, and map into a shared latent space.
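As a rough sketch of this step, the snippet below converts prices to z-scored log returns, adds cyclical hour-of-day features, and projects the result into a latent space. The function `embed_context`, the choice of log returns, and the random projection (standing in for a learned embedding matrix) are all assumptions made for illustration.

```python
import numpy as np

def embed_context(prices, hours, d_model=16, seed=0):
    """Normalize prices, add time features, project to d_model dims (sketch)."""
    prices = np.asarray(prices, dtype=float)
    hours = np.asarray(hours, dtype=float)
    # Log returns remove the price level; z-scoring removes the per-symbol scale.
    returns = np.diff(np.log(prices))
    z = (returns - returns.mean()) / (returns.std() + 1e-8)
    # Cyclical hour-of-day features, aligned with the returns (first hour dropped).
    angle = 2.0 * np.pi * hours[1:] / 24.0
    features = np.column_stack([z, np.sin(angle), np.cos(angle)])
    # Assumption: a random matrix stands in for the learned embedding weights.
    rng = np.random.default_rng(seed)
    n_features = features.shape[1]
    W = rng.normal(scale=n_features ** -0.5, size=(n_features, d_model))
    return features @ W  # shape: (len(prices) - 1, d_model)

prices = [100.0, 101.0, 99.5, 102.0, 103.5, 101.0]
hours = [0, 1, 2, 3, 4, 5]
context = embed_context(prices, hours)
```

Normalizing per window rather than over the full history is one way to limit the drift bias that a fixed global scale can introduce.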

Attend Globally

Multi-head attention weights past segments based on relevance to the current regime.
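The weighting described here can be sketched with plain NumPy. This is the textbook scaled dot-product formulation with a causal mask, offered as an assumption about the general mechanism rather than TimeGPT's exact layer; the random weight matrices stand in for trained parameters.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, n_heads, rng):
    """Causal multi-head self-attention over a (T, d_model) sequence (sketch)."""
    T, d_model = x.shape
    d_head = d_model // n_heads
    # Assumption: random matrices stand in for learned projections.
    Wq, Wk, Wv, Wo = (
        rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(4)
    )

    def split(m):  # (T, d_model) -> (n_heads, T, d_head)
        return m.reshape(T, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ Wq), split(x @ Wk), split(x @ Wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (n_heads, T, T)
    # Causal mask: each step may attend only to itself and earlier steps.
    future = np.triu(np.ones((T, T), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    weights = softmax(scores, axis=-1)
    out = (weights @ v).transpose(1, 0, 2).reshape(T, d_model)
    return out @ Wo, weights

rng = np.random.default_rng(0)
x = rng.normal(size=(6, 8))  # 6 time steps, 8-dimensional embeddings
out, weights = multi_head_attention(x, n_heads=2, rng=rng)
```

Row `t` of `weights[h]` shows how much head `h` draws on each past step when encoding step `t`, which is the "relevance to the current regime" in numeric form.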

Decode Horizon

Generate multi-step forecasts with probabilistic intervals and calibration checks.
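A minimal sketch of this step, assuming the decoder can produce sampled future paths: collapse the samples into per-step quantiles, then measure how often realized values land inside the band. The simulated random-walk paths, the 10%/90% band, and the helper names are illustrative assumptions.

```python
import numpy as np

def quantile_forecast(sample_paths, quantiles=(0.1, 0.5, 0.9)):
    """Collapse (n_samples, horizon) sampled paths into per-step quantiles."""
    return np.quantile(sample_paths, quantiles, axis=0)  # (n_quantiles, horizon)

def interval_coverage(lower, upper, actual):
    """Fraction of realized values that fall inside [lower, upper]."""
    actual = np.asarray(actual, dtype=float)
    return float(np.mean((actual >= lower) & (actual <= upper)))

rng = np.random.default_rng(0)
# Hypothetical decoder output: 500 sampled cumulative-return paths, 12 steps ahead.
paths = rng.normal(0.0, 0.01, size=(500, 12)).cumsum(axis=1)
q10, q50, q90 = quantile_forecast(paths)

# Stand-in for realized values; in practice these come from held-out data.
realized = rng.normal(0.0, 0.01, size=12).cumsum()
coverage = interval_coverage(q10, q90, realized)
```

A well-calibrated 10% to 90% band should cover roughly 80% of realized values when averaged over many forecast windows; a single window like this one is too small to judge.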

Score Confidence

Translate uncertainty into a confidence signal for downstream risk sizing.
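One simple way to turn interval width into a confidence weight is an exponential decay, sketched below. The mapping, the `scale` and `floor` parameters, and both function names are assumptions for illustration; the text does not specify which mapping the decision engine uses.

```python
import numpy as np

def confidence_from_interval(q_low, q_high, scale):
    """Map interval width to (0, 1]: narrow band -> near 1, wide band -> near 0."""
    width = np.asarray(q_high, dtype=float) - np.asarray(q_low, dtype=float)
    return np.exp(-width / scale)

def position_size(base_size, confidence, floor=0.2):
    """Scale a base position by confidence; take no position below the floor."""
    confidence = np.asarray(confidence, dtype=float)
    return np.where(confidence >= floor, base_size * confidence, 0.0)

# Two forecasts: a tight band (width 0.02) and a wide band (width 0.10).
conf = confidence_from_interval(q_low=[-0.01, -0.05], q_high=[0.01, 0.05], scale=0.05)
sizes = position_size(1000.0, conf)
```

Here the tight band keeps most of the base position, while the wide band falls below the floor and is sized to zero.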

Core Equations

Attention-based forecasting enables long-range memory with scalable computation while keeping uncertainty front and center.
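No equations appear in this section of the text. As a point of reference, the textbook forms of the two ingredients named above are scaled dot-product attention and the quantile (pinball) loss. These are the standard definitions, assumed here for illustration, not TimeGPT's confirmed formulation. The symbols $Q$, $K$, $V$ are the query, key, and value matrices, $d_k$ is the key dimension, $X$ is the embedded context, $W$ are projection matrices, and $\hat{y}_\tau$ is the forecast for quantile level $\tau$.

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
$$

$$
\mathrm{head}_i = \mathrm{Attention}\bigl(X W_i^{Q},\, X W_i^{K},\, X W_i^{V}\bigr), \qquad
\mathrm{MultiHead}(X) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^{O}
$$

$$
\rho_\tau(y, \hat{y}_\tau) = \max\bigl(\tau\,(y - \hat{y}_\tau),\ (\tau - 1)\,(y - \hat{y}_\tau)\bigr)
$$

Minimizing $\rho_\tau$ over several values of $\tau$ yields quantile outputs, and the asymmetry of the loss (under-forecasts weighted by $\tau$, over-forecasts by $1 - \tau$) is what allows the bands to be asymmetric.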

Where TimeGPT Fits

- Captures long-range dependencies and irregular cycles.

- Multi-horizon forecasts with calibrated uncertainty.

- Strong transfer learning across correlated assets.

- Requires careful normalization to avoid drift bias.

- Expensive to train compared to classical baselines.

- Attention can overfit short windows without gating.

TimeGPT is the long-memory engine that powers mid-horizon projections and signal validation.