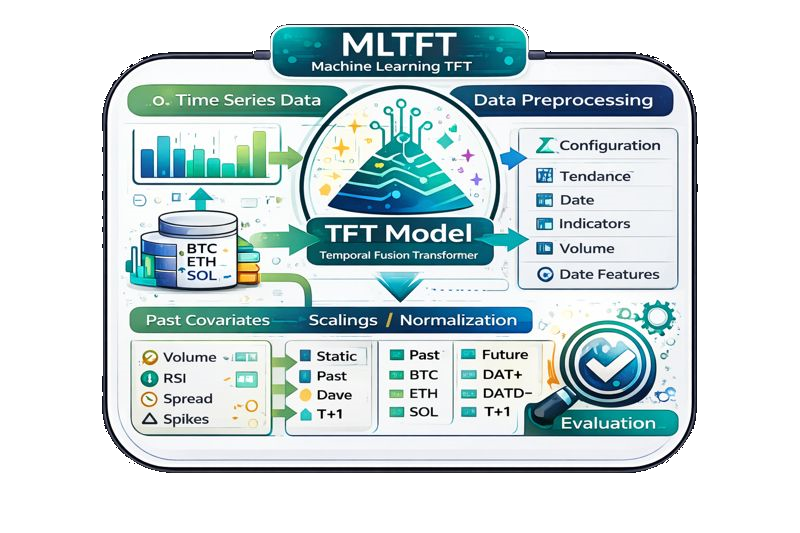

MLTFT Temporal Fusion Stack

MLTFT is our multi-layer temporal fusion transformer. It blends sequential attention, variable selection networks, and static context gating to merge prices, volume, order flow, and macro signals into a unified forecast.

How MLTFT Works

MLTFT extends the temporal fusion transformer by stacking multi-layer attention blocks and adding explicit variable selection. Each input channel receives a learned gate, so the model can amplify market drivers such as liquidity spikes while muting low-signal noise.

Static context, such as exchange microstructure or asset category, is injected early to bias the network toward the correct regime. The result is a high-resolution forecast designed to stay stable in volatile conditions while responding to regime transitions.

- Variable selection networks with learned gates.

- Temporal attention over encoded memory states.

- Static context enrichment to disambiguate regimes.

- Decoder heads for short, mid, and long horizons.
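The gating idea in the list above can be sketched in a few lines of NumPy. This is a minimal illustration, not the MLTFT implementation: the function name `select_variables`, the projection matrices `w_gate` and `w_static`, and all shapes are assumptions made for the example, and random values stand in for learned parameters.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def select_variables(channels, static_ctx, w_gate, w_static):
    """Weight each input channel with a softmax gate conditioned on static context.

    channels:   (T, C, D) array, one D-dim embedding per channel per time step
    static_ctx: (S,) static context vector (e.g. an asset-category embedding)
    w_gate:     (C * D, C) hypothetical learned projection
    w_static:   (S, C) hypothetical learned projection
    """
    T, C, D = channels.shape
    # Gate logits depend on all channels at a time step plus the static context.
    logits = channels.reshape(T, C * D) @ w_gate + static_ctx @ w_static
    weights = softmax(logits, axis=-1)                      # (T, C), rows sum to 1
    fused = (weights[:, :, None] * channels).sum(axis=1)    # (T, D) weighted blend
    return fused, weights

# Random stand-ins for learned parameters and inputs (illustrative only).
rng = np.random.default_rng(0)
T, C, D, S = 6, 4, 8, 3
fused, weights = select_variables(
    rng.normal(size=(T, C, D)), rng.normal(size=S),
    rng.normal(size=(C * D, C)), rng.normal(size=(S, C)),
)
```

Because the weights for each time step sum to one, they can be read as a per-step importance score for each channel, which is where the interpretability of this style of model comes from.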

Feature Fusion

Merge price, volume, order flow, and macro signals into a shared embedding.

Gated Selection

Learn variable weights that adapt to shifting market regimes.

Temporal Attention

Focus attention on the most predictive historical segments.
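As a rough sketch of what attending over historical segments means, the snippet below implements single-head scaled dot-product attention with a causal mask, so each time step can only look at itself and earlier steps. The function name `causal_attention` and the projection matrices are hypothetical; the source does not specify MLTFT's head count, masking, or layer layout.

```python
import numpy as np

def causal_attention(states, w_q, w_k, w_v):
    """Single-head scaled dot-product attention over encoded states.

    states: (T, D) encoded memory states, oldest first
    w_q, w_k, w_v: (D, D_k) hypothetical learned projections
    Returns the attended output (T, D_k) and the attention weights (T, T).
    """
    T = states.shape[0]
    q, k, v = states @ w_q, states @ w_k, states @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Mask future positions so step t only attends to steps <= t.
    future = np.triu(np.ones((T, T), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    # Row-wise softmax; the diagonal is never masked, so each row max is finite.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(1)
states = rng.normal(size=(5, 4))
attended, attn = causal_attention(
    states, rng.normal(size=(4, 4)), rng.normal(size=(4, 4)), rng.normal(size=(4, 4))
)
```

Each row of `attn` shows how much weight a given step places on every earlier step, which is one way to inspect which historical segments the model relies on.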

Multi-Horizon Output

Emit forecasts for multiple horizons with stability checks.
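One simple way to picture multi-horizon output is a set of linear heads that all read the same context vector. The sketch below assumes exactly that; the head names, horizon lengths, and the finite-value check are placeholders chosen for illustration, since the source does not say what its stability checks involve.

```python
import numpy as np

def forecast_horizons(context, heads):
    """Project one context vector through a separate linear head per horizon.

    context: (D,) final decoder state
    heads:   dict mapping a horizon name to a hypothetical (D, H) weight matrix
    Returns a dict mapping each horizon name to an (H,) forecast.
    """
    forecasts = {name: context @ w for name, w in heads.items()}
    # Placeholder stability check (an assumption): reject NaN or infinite output.
    for name, values in forecasts.items():
        if not np.all(np.isfinite(values)):
            raise ValueError(f"non-finite forecast from head {name!r}")
    return forecasts

rng = np.random.default_rng(2)
D = 8
heads = {
    "short": rng.normal(size=(D, 1)),   # horizon lengths are illustrative
    "mid": rng.normal(size=(D, 5)),
    "long": rng.normal(size=(D, 20)),
}
forecasts = forecast_horizons(rng.normal(size=D), heads)
```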

Core Equations

MLTFT uses gates and attention to select features and concentrate memory on the most useful time segments.
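For reference, the standard forms of these operations in the temporal fusion transformer literature are given below. Whether MLTFT uses them unchanged is an assumption. The symbols follow common usage: $\Xi_t$ is the flattened vector of all channel embeddings at time $t$, $c_s$ is the static context vector, $\tilde{\xi}_t^{(j)}$ is the processed embedding of channel $j$, and GRN denotes a gated residual network.

```latex
% Variable selection: softmax weights over the m input channels at time t
v_t = \mathrm{softmax}\big(\mathrm{GRN}(\Xi_t, c_s)\big), \qquad
\tilde{\xi}_t = \sum_{j=1}^{m} v_t^{(j)} \, \tilde{\xi}_t^{(j)}

% Gated linear unit: a sigmoid gate scales a linear transform elementwise
\mathrm{GLU}(\gamma) = \sigma(W_1 \gamma + b_1) \odot (W_2 \gamma + b_2)

% Scaled dot-product attention over encoded time steps
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

The first line selects features, the second lets a layer suppress its own contribution when the gate is near zero, and the third concentrates memory on the time steps whose keys best match the current query.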

Where MLTFT Fits

- Handles multi-source signals with dynamic feature selection.

- Balances interpretability and high-capacity modeling.

- Resilient to regime shifts through static context gating.

- Requires careful feature hygiene to avoid leakage.

- More compute-intensive than classical models.

- Needs calibration to avoid attention drift.
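On the feature hygiene point in the list above, two common precautions are splitting data chronologically and fitting normalization statistics on the training window only. The sketch below shows both; the function name `chronological_split` and the 80/20 split are illustrative assumptions, not a description of any specific MLTFT pipeline.

```python
import numpy as np

def chronological_split(features, targets, train_frac=0.8):
    """Split time-ordered data without shuffling and scale with train-only stats.

    features: (N, F) array ordered oldest to newest
    targets:  (N,) array aligned with features
    """
    n_train = int(len(features) * train_frac)
    # Statistics come from the training window only, so no information
    # from the evaluation period leaks into the scaled training inputs.
    mean = features[:n_train].mean(axis=0)
    std = features[:n_train].std(axis=0) + 1e-8  # guard against zero variance

    def scale(x):
        return (x - mean) / std

    train = (scale(features[:n_train]), targets[:n_train])
    test = (scale(features[n_train:]), targets[n_train:])
    return train, test

rng = np.random.default_rng(3)
(train_x, train_y), (test_x, test_y) = chronological_split(
    rng.normal(size=(100, 4)), rng.normal(size=100)
)
```

Shuffling before splitting, or computing the mean and standard deviation over the full series, would both let future information influence training.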

MLTFT is the fusion layer for mixed-signal forecasting and is often paired with TimeGPT for mid-range confirmation.